Published on November 7, 2018

A General-Purpose Framework for Defining and Solving Meaningful Problems in 3 Steps

Imagine the following scenario: your boss asks you to build a machine learning model that predicts, each month, which customers of your subscription service will churn during that month, with churn defined as no active membership for more than 31 days. You painstakingly make labels by finding historical examples of churn, brainstorm and engineer features by hand, then train and manually tune a machine learning model to make predictions.

Pleased with the metrics on the holdout testing set, you return to your boss with the results, only to be told that you must now develop a different solution: one that makes predictions every two weeks, with churn defined as 14 days of inactivity. Dismayed, you realize none of your previous work can be reused because it was designed for a single prediction problem.

You wrote a labeling function for a narrow definition of churn, and the downstream steps in the pipeline (feature engineering and modeling) also depended on the initial parameters and will have to be redone. Because you hard-coded a specific set of values, you’ll have to build an entirely new pipeline to address what is only a small change in problem definition.
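To make the hard-coding problem concrete, here is a minimal sketch of a labeling function that takes the churn definition as an argument instead of baking it in. The function name `make_churn_labels`, the `activity` table, and its column names (`customer_id`, `date`) are hypothetical, chosen only for illustration, and the churn rule is simplified to "no activity in the `churn_days` days after the cutoff".

```python
import pandas as pd


def make_churn_labels(activity, cutoffs, churn_days):
    """Label each (customer, cutoff) pair as churned when the customer has
    no activity in the `churn_days` days after the cutoff.

    `activity` is an assumed table with `customer_id` and `date` columns.
    """
    window = pd.Timedelta(days=churn_days)
    rows = []
    for cutoff in cutoffs:
        # Only customers seen on or before the cutoff can be labeled.
        known = activity.loc[activity["date"] <= cutoff, "customer_id"].unique()
        # Customers with any activity inside the look-ahead window.
        in_window = activity[
            (activity["date"] > cutoff) & (activity["date"] <= cutoff + window)
        ]
        active = set(in_window["customer_id"])
        for customer in known:
            rows.append(
                {
                    "customer_id": customer,
                    "cutoff_time": cutoff,
                    "churn": customer not in active,
                }
            )
    return pd.DataFrame(rows, columns=["customer_id", "cutoff_time", "churn"])


# Toy activity log (made-up values, for illustration only).
activity = pd.DataFrame(
    {
        "customer_id": [1, 1, 2, 2, 2],
        "date": pd.to_datetime(
            ["2018-01-05", "2018-02-10", "2018-01-10", "2018-01-25", "2018-02-05"]
        ),
    }
)

cutoffs = pd.to_datetime(["2018-01-15"])
monthly = make_churn_labels(activity, cutoffs, churn_days=31)
biweekly = make_churn_labels(activity, cutoffs, churn_days=14)
```

With the same data and the same function, customer 1 is labeled as retained under the 31-day definition and as churned under the 14-day definition. The cutoff times are passed in as well, so they could be generated at whatever prediction frequency the business asks for, for example with `pd.date_range`.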

Structuring the Machine Learning Process

This situation is indicative of how solving problems with machine learning is currently approached. The process is ad hoc and requires a custom solution for each parameter set, even when using the same data. The result is that companies miss out on the full benefits of machine learning because they are limited to solving a small number of problems with a time-intensive approach.

The lack of a standardized methodology means there is no scaffolding for solving problems with machine learning that can be quickly adapted and redeployed as the parameters of a problem change.

How can we improve this process? Making machine learning more accessible will require a general-purpose framework for setting up and solving problems. This framework should accommodate existing tools, adapt rapidly to changing parameters, apply across different industries, and provide enough structure to give data scientists a clear path for laying out and working through meaningful problems with machine learning.

At Feature Labs, we’ve put a lot of thought into this issue and developed what we think is a better way to solve useful problems with machine learning. In the next three parts of this series, I’ll lay out how we approach framing and building machine learning solutions in a structured, repeatable manner built around the steps of prediction engineering, feature engineering, and modeling.

We’ll walk through the approach as applied in full to one use case — predicting customer churn — and see how we can adapt the solution if the parameters of the problem change. Moreover, we’ll be able to utilize existing tools — Pandas, Scikit-Learn, Featuretools — commonly used for machine learning.

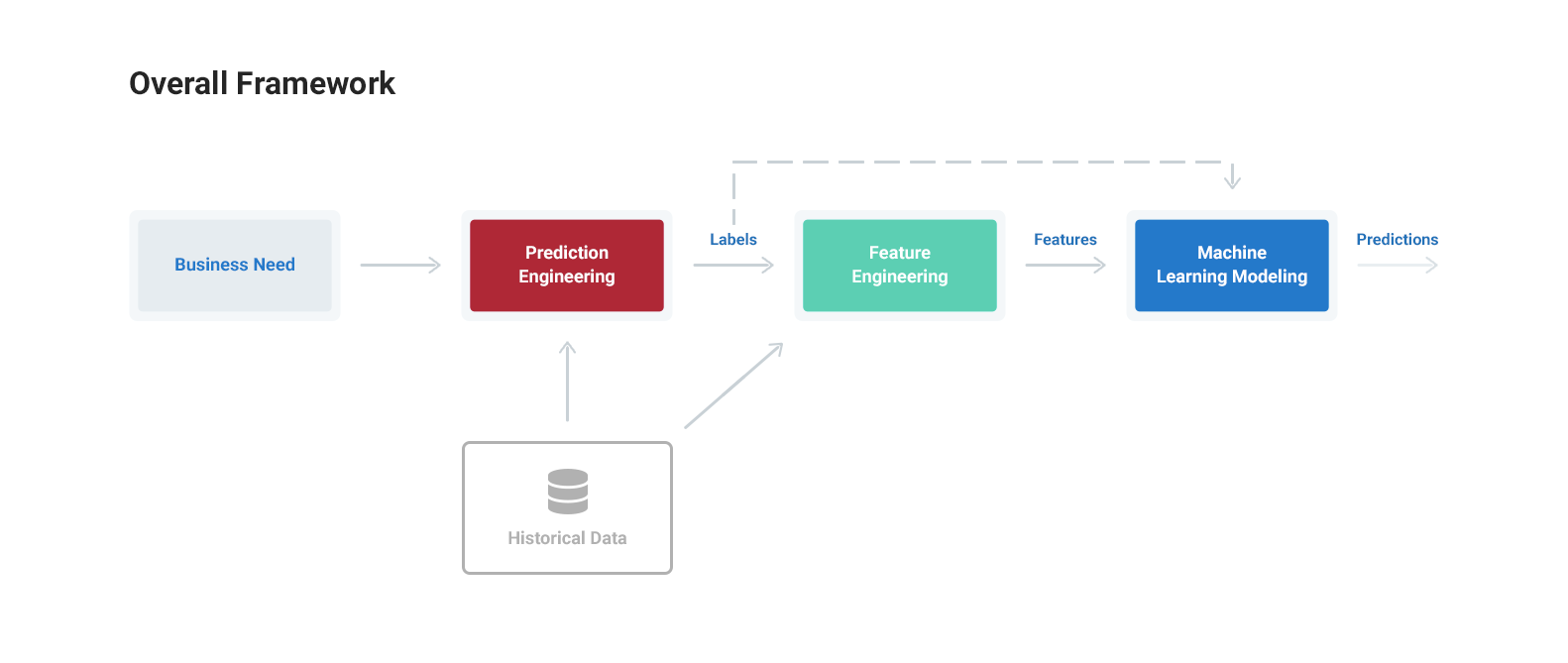

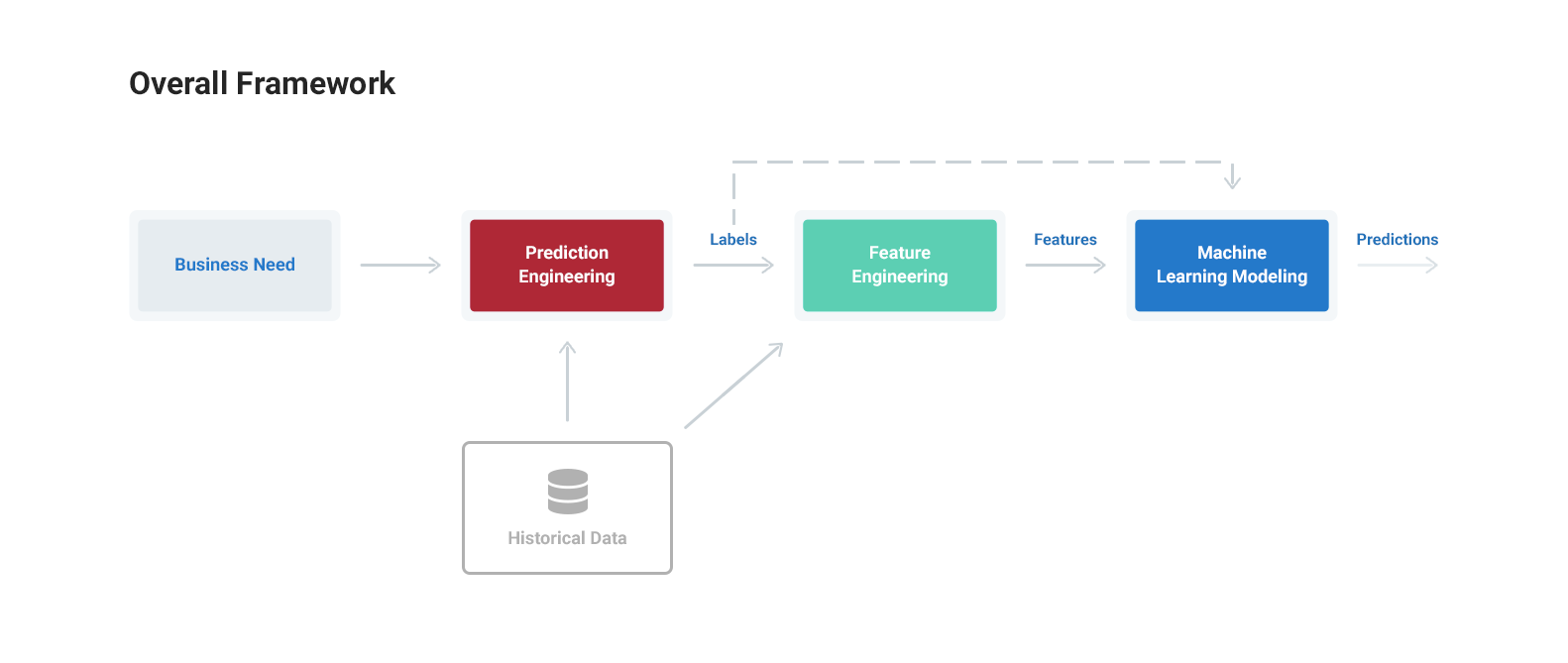

The general machine learning framework is outlined below:

- Prediction Engineering: State the business need, translate it into a machine learning problem, and generate labeled examples from a dataset

- Feature Engineering: Extract predictor variables — features — from the raw data for each of the labels

- Modeling: Train a machine learning model on the features, tune for the business need, and validate predictions before deploying to new data
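The three steps above can be sketched as one pipeline driven by a single problem definition. This is only an illustration under stated assumptions: `ChurnProblem`, `run_pipeline`, and the toy stand-in functions are hypothetical names, not part of Featuretools or any other library, and the "model" is just a baseline churn rate.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ChurnProblem:
    """Hypothetical container for the parameters of one prediction problem."""
    prediction_freq_days: int  # how often predictions are made
    churn_days: int            # days of inactivity that count as churn


def run_pipeline(data, problem, make_labels, make_features, fit_model):
    """Chain the three steps; each step is passed in as a plain function."""
    labels = make_labels(data, problem)     # prediction engineering
    features = make_features(data, labels)  # feature engineering
    return fit_model(features, labels)      # modeling


# Toy stand-ins for the real steps (assumptions, for illustration only).
# They use only `churn_days`; `prediction_freq_days` is carried along for
# a real labeling step that needs to generate cutoff times.
def make_labels(data, problem):
    return {customer: days > problem.churn_days for customer, days in data.items()}


def make_features(data, labels):
    return {customer: [data[customer]] for customer in labels}


def fit_model(features, labels):
    # Baseline "model": the observed churn rate among labeled customers.
    return sum(labels.values()) / len(labels)


# Made-up data: days since each customer was last active.
days_inactive = {"a": 10, "b": 20, "c": 40}

monthly = ChurnProblem(prediction_freq_days=30, churn_days=31)
# Changing the problem means changing parameters, not rewriting the pipeline.
biweekly = replace(monthly, prediction_freq_days=14, churn_days=14)

monthly_rate = run_pipeline(days_inactive, monthly, make_labels, make_features, fit_model)
biweekly_rate = run_pipeline(days_inactive, biweekly, make_labels, make_features, fit_model)
```

In practice the stand-ins would be replaced by real implementations built on tools such as Pandas, Featuretools, and Scikit-Learn; the point of the sketch is only that every step reads its parameters from one place.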

A general-purpose framework for defining and solving meaningful problems with machine learning
